50 Cloud Backup Stats You Should Know In 2024

We’ve collected the latest cloud backup and recovery statistics to help you keep up to date on the best ways to secure your data.

Almost every organization in the world currently hosts at least some of its IT infrastructure in the cloud. The cloud promises flexibility, scalability, and reduced costs. For all their benefits, however, cloud environments that are not properly configured and secured can be vulnerable to all the cyber threats that traditionally targeted on-premises environments. These include malware and social engineering threats, which can give attackers access to a wealth of data and resources.

When such an attack occurs, it’s the responsibility of the organization—not the cloud service provider—to have created backups of their data for restoration purposes, allowing them to resume business operations. Unfortunately, 43% of IT decision-makers falsely believe that cloud providers are responsible for protecting and recovering public cloud data. This misconception can lead to increased vulnerability when it comes to cloud-based cyberthreats – organizations may fail to properly secure their data or implement adequate disaster recovery plans.

Backups are one of the most important and effective security measures commonly used. They don’t prevent your organization from being breached, but they can help you recover more quickly when your systems are compromised. Despite this, only 24% of organizations have a mature disaster recovery plan that’s well-documented, tested, and updated.

We’ve collected the most recent cloud backup and recovery statistics from around the world to illustrate the importance of backing up your cloud data. These stats come from third-party surveys and reports, and we’ll be updating them as new research emerges to help you stay on top of the latest figures.

The Frequency Of Cloud Data Breaches

According to a recent survey, 79% of companies have experienced at least one cloud data breach, and 43% have reported 10 or more breaches in recent years. Considering that 92% of organizations are currently hosting at least some of their data in the cloud, that means the majority of all businesses around today have experienced a cloud data breach.

Almost half of all data breaches occur in cloud-based systems. Part of the reason is that many organizations were forced to migrate to the cloud rapidly during the COVID-19 pandemic so that employees could continue working from wherever they were based. This fast rate of adoption meant that many businesses overlooked security in the name of productivity, using tools that were “lifted and shifted” from on-prem environments rather than purpose-built for the cloud. In fact, 54% of businesses use applications that have been moved to the cloud from an on-prem environment. Larger enterprises are the most common culprits, while SMBs are more likely to have taken a cloud-native approach.

Since then, many organizations have been retroactively securing their cloud environments to protect themselves against cloud-based cyberthreats. But what exactly are the biggest causes of data loss within cloud environments?

How Businesses Are Being Breached

76% of organizations have experienced critical data loss, and 45% of those businesses lost their data permanently as a result. Some of the most commonly cited causes of data breaches include human error, cyber-attacks (specifically ransomware and account takeover), hardware failures, and natural disasters.

Human Error

Human error is one of the leading causes of data breaches. In fact, a recent report from ITIC found that 64% of downtime events are related to human errors, such as device mismanagement or misconfiguration, and inadvertent data loss.

According to Verizon’s most recent DBIR, misconfiguration errors are the second most common cause of breach.

Misconfiguration is when someone sets up computing assets incorrectly, leaving them vulnerable to malicious activity and making it more difficult for security teams to identify breaches. One of the main misconfiguration mistakes is the incorrect setup of access permissions, which often results in users being granted permission to access more areas of the network than they actually need.

Unfortunately, this is a very common problem: more than 90% of cloud identities are using less than 5% of the permissions they’ve been granted, and 33% of all folders used by a company are open to everyone. In addition, 64% of employees have access to 1,000 or more sensitive files, on average.

Attackers can exploit accounts with misconfigured permissions to gain access to critical company data without being detected by security teams.
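To make the idea of over-permissioned accounts concrete, here is a minimal Python sketch that flags identities using less than 5% of the permissions they have been granted. The `Identity` class, the permission names, and the idea of deriving the `used` set from access logs are all illustrative assumptions, not the method or API of any particular cloud provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    """Hypothetical record for one cloud identity (user, role, or service account)."""
    name: str
    granted: frozenset  # permissions assigned to the identity
    used: frozenset     # permissions actually exercised (assumed to come from access logs)


def usage_ratio(identity: Identity) -> float:
    """Fraction of granted permissions that were actually used."""
    if not identity.granted:
        return 1.0  # nothing granted, so nothing is unused
    return len(identity.used & identity.granted) / len(identity.granted)


def over_permissioned(identities, threshold=0.05):
    """Names of identities using less than `threshold` of their granted permissions."""
    return [i.name for i in identities if usage_ratio(i) < threshold]


# Made-up example data: one service account with 100 grants and 2 in use,
# and one analyst using half of their grants.
identities = [
    Identity(
        "ci-bot",
        frozenset(f"perm:{n}" for n in range(100)),
        frozenset({"perm:1", "perm:2"}),
    ),
    Identity(
        "analyst",
        frozenset({"read:reports", "read:dashboards"}),
        frozenset({"read:reports"}),
    ),
]

flagged = over_permissioned(identities)
print(flagged)
```

Identities flagged by a check like this are candidates for having their permissions trimmed back to what they actually need.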

Data deletion is another common cause of compromise that often stems from human error. A recent survey found that malicious deletion is the most common cause of SaaS data loss, responsible for 25% of incidents, while accidental deletion is responsible for 20%.

Another survey reported that 67% of Americans who own a computer admit to having accidentally deleted something, and 44% report having lost access to their data when a shared drive or synced drive was deleted.

Account Takeover Attacks

While human error and misconfiguration are the second leading cause of breach, Verizon’s report gave the top spot to hacking.

In the last year, 75% of organizations globally experienced a social engineering attack such as “phishing”. In a phishing attack, a bad actor contacts their target, usually via email, posing as a trusted sender such as a colleague or service provider. During their communication, the attacker tries to manipulate their target into handing over sensitive data directly, downloading malware, or entering their credentials on a fake page disguised to look like a legitimate domain. This last tactic is a particular problem when it comes to cloud data, as content delivery networks and cloud file share services enable their customers to host their own content on a legitimate domain. This gives genuine users increased storage, but it also gives cybercriminals a seemingly legitimate domain on which they can host malicious files.

Ransomware

Ransomware is on the rise. Two out of three midsize organizations have suffered from a ransomware attack in the last 18 months. In the past year, there has been an 85% increase in incidents that involved employees having their names and other personal data published on the dark web, and a 144% increase in the average ransom demand. So, not only are ransomware attacks on the rise, but they’re becoming increasingly expensive for victims to recover from.

59% of ransomware incidents where the data is successfully encrypted involve data in the public cloud; either the data is stolen from the public cloud, or the attacker stores the stolen data in the public cloud while they wait for their ransom payment. The most common cloud storage services used for this purpose are Google Drive, Amazon S3, and Mega.nz. Sending exfiltrated data to legitimate services such as these makes it more difficult for the victim to locate their data.

And unfortunately, 97% of modern ransomware incidents attempt to infect backup repositories in addition to primary systems. This statistic highlights the need for a secure backup service that includes anti-tampering and ransomware detection technology, and a disaster recovery plan that is regularly tested and updated.

Hard Drive Failure

Hard drive failures and service outages are a common cause of downtime and data loss, with service outages causing 22% of SaaS data loss. And these incidents are more common than you might think; 48% of Americans have had an external hard drive crash, and 21% of those crashes occurred in the last year.

Almost a quarter of organizations associate old and inadequate server hardware with reliability issues and downtime. While that doesn’t necessarily mean permanent data loss, it can still cause huge disruptions to business operations. In the event of extended downtime, it can be very useful to have backups from which you can continue to work.

Natural Disaster

While only 5% of business downtime is caused by natural disasters such as floods, fires, and earthquakes, these disasters can prove incredibly difficult to recover from unless you have backups in place, as they can destroy entire physical servers.

However, you can’t rely on just any backups to prevent your organization from losing data to a natural disaster. They must be stored in a separate location, such as a cloud environment. If you store your backups locally, they could be destroyed in the same disaster that takes down your primary systems.

Current Use Of Backup And Recovery

Despite the risks that the above incidents pose, many organizations still aren’t properly backing up their data. Let’s take a closer look at the numbers.

How Frequently Are Businesses Backing Up Their Data?

One third of organizations only have poorly documented disaster recovery plans in place, and 41% don’t update their plans. When it comes to small- and medium-sized businesses specifically, 51% don’t have an incident response plan at all.

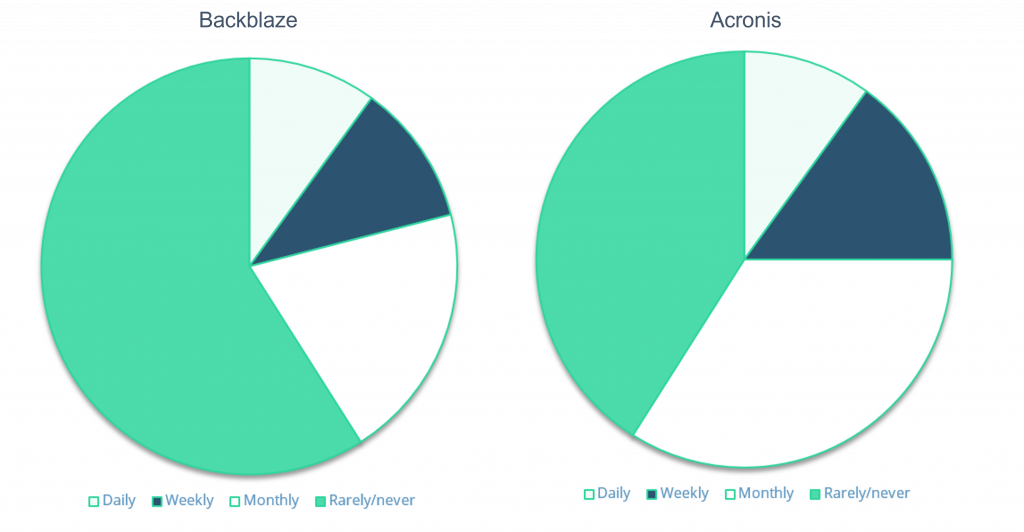

With that in mind, it comes as little surprise that only 10% of IT users back up their computer data daily, a statistic corroborated by two independent studies conducted by Backblaze and Acronis. According to Backblaze, 11% of users create backups weekly, 20% create backups monthly, 13% create them yearly, 26% create them less than once a year, and 20% have never backed up their data.

Unfortunately, Acronis’ report shows similar findings: 15% of users back up once or twice a week, 34% back up monthly, and a staggering 41% of users rarely or never back up their data.

In the charts below, we’ve combined Backblaze’s results for “yearly”, “less than yearly” and “never” for a more direct comparison.

Acronis’ report found that IT managers are a little better at backing up their data than most IT users; a third of IT managers create backups weekly and 25% create monthly backups. Additionally, 20% of IT managers claim to also test the restoration of their backups weekly.

What Data Is Being Backed Up?

According to a recent study, 91% of organizations use some form of backup to protect their databases. But unfortunately, not all types of data are given the same level of security; 62% of organizations back up proprietary application data, but only 28% back up research and development data. Despite the increasing reliance on SaaS applications amongst modern businesses, fewer than one in five organizations back up their SaaS data. Considering that almost half of SaaS tool users have experienced data loss, this figure is quite alarming—but it usually comes down to the common misconception that SaaS providers will back up the data you store in their application for you.

Unfortunately, this isn’t the case. SaaS applications are built on a shared responsibility model, which means that the SaaS provider is responsible for the infrastructure of the application (e.g., the datacenter, network controls, applications, virtualization, and operating system), and will resolve any issues related to software failure or downtime. You, the customer, are responsible for protecting your data against loss or damage caused by human error, programmatic error, or threat actors.

Additionally, while some SaaS applications offer limited backup and recovery services, organizations shouldn’t rely solely on these as they often only store data for a limited amount of time. Microsoft 365, for example, only stores data for an average of 60-90 days; it’s likely because of this that only 15% of the 74% of organizations relying on Microsoft 365 for backup could recover 100% of their data following a data loss incident.

Where Are Businesses Storing Their Backups?

Best practices for backup and recovery state that you should create at least three copies of your data, store them on at least two different storage media, and store at least one copy off-site. This is called the “3-2-1” backup rule. Having at least three copies of your data ensures that you can restore your data, even if both the original and one of the backup copies are destroyed. It is worth bearing this in mind as 97% of modern ransomware incidents attempt to infect backup repositories as well as primary systems. Storing your backups on two different media types (e.g., in your on-prem data center and in the cloud) ensures that no single point of failure can destroy all your backup copies. By storing one copy off-site, you can ensure that a natural disaster or other catastrophic physical event doesn’t destroy all copies of your data.
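As an illustration, the 3-2-1 rule can be expressed as a simple check over an inventory of data copies. The sketch below is a hypothetical Python example: the `DataCopy` class, its fields, and the sample locations are assumptions made for illustration, and a real inventory would come from your own backup tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCopy:
    """Hypothetical description of one copy of a data set (original or backup)."""
    location: str  # e.g. "hq-datacenter"
    medium: str    # e.g. "disk", "tape", "cloud-object-storage"
    offsite: bool  # True if stored away from the primary site


def satisfies_3_2_1(copies) -> bool:
    """At least 3 copies, on at least 2 different media, with at least 1 off-site."""
    return (
        len(copies) >= 3
        and len({c.medium for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


# Made-up inventories for illustration.
hybrid = [
    DataCopy("hq-datacenter", "disk", offsite=False),          # original
    DataCopy("hq-backup-server", "disk", offsite=False),       # local backup
    DataCopy("cloud-region-a", "cloud-object-storage", offsite=True),
]
local_only = [
    DataCopy("hq-datacenter", "disk", offsite=False),
    DataCopy("hq-backup-server", "disk", offsite=False),
]

print(satisfies_3_2_1(hybrid))      # meets all three conditions
print(satisfies_3_2_1(local_only))  # too few copies, one medium, nothing off-site
```

The hybrid inventory passes because the cloud copy supplies both the second medium and the off-site location, which is why combining local and cloud backups is a common way to meet the rule.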

Unfortunately, only 15% of IT managers and 12% of IT users are following these best practices, with many failing to store their backups both locally and in a cloud storage facility. Over the past four years, the use of cloud backup has increased from 28% in 2019 to 54% in 2022, but the use of local backups has been in rapid decline—from 62% in 2019 to just 33% in 2022.

This trend is in line with global spending for on-prem vs. cloud infrastructure. Traditionally, organizations spent far more on physical data centers than on cloud infrastructure. However, this has changed in recent years. In 2021, spending on data center hardware and software reached $98.5 billion, a figure that is a little over half of the record-breaking total cloud infrastructure spend of $178 billion.

But even for smaller organizations with reduced storage needs (and limited budgets), it’s a good idea to invest in a hybrid backup model that creates both cloud and local backups, not only for increased security but also to minimize the cost of a data breach. According to recent research, data breaches cost 26% less on average for organizations using a hybrid cloud data storage model, compared to a public cloud environment.

The Effectiveness Of Cloud Backups

Having robust, secure backups in place is the only way to fully recover from a data loss incident such as a natural disaster, accidental deletion, or a ransomware attack. Some organizations are misled into believing that, in the case of ransomware, it’s easier to just pay the ransom than to spend time and money creating and restoring backups. However, it’s important to remember that you’re dealing with a criminal, so paying the ransom does not guarantee the safe return of your data. In fact, only around 8% of ransomware victims that pay the ransom recover all their data, and the average ransomware victim loses around 35% of their data. Additionally, paying the ransom doesn’t ensure that all traces of malware are removed from your systems, leaving you vulnerable to a second attack; it could also paint you as an easy target to other ransomware gangs.

However, as with any cybersecurity solution, backup and recovery solutions must be correctly configured and regularly tested to ensure their effectiveness. On average, only 57% of backups are successful and 61% of restores are successful. To maximize the rate of success, we recommend that organizations regularly test their cloud backup solution’s ability to create backups, and its ability to carry out data restoration at multiple levels (e.g., single file restores and full system recovery).
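One simple way to test a single-file restore is to compare a checksum of the restored file against the original. The Python sketch below simulates this with temporary files, using `shutil.copy2` as a stand-in for a real backup and restore tool; the file names and helper functions are illustrative assumptions rather than part of any specific product.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_matches_source(source: Path, restored: Path) -> bool:
    """True if the restored file is byte-for-byte identical to the source."""
    return sha256_of(source) == sha256_of(restored)


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)

    # Original file (made-up content).
    source = root / "report.txt"
    source.write_text("quarterly figures\n")

    # Simulated backup, then simulated restore (stand-ins for a real tool).
    backup = root / "backup" / "report.txt"
    backup.parent.mkdir()
    shutil.copy2(source, backup)

    restored = root / "restored" / "report.txt"
    restored.parent.mkdir()
    shutil.copy2(backup, restored)

    restore_ok = restore_matches_source(source, restored)

    # Simulate a damaged restore to show that the check catches it.
    restored.write_text("corrupted\n")
    corruption_detected = not restore_matches_source(source, restored)

print(restore_ok, corruption_detected)
```

A check like this only covers individual files; a full system recovery test would also need to confirm that applications start and that the restored data is usable.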

The Impact Of A Data Breach

Data breaches can have multiple simultaneous negative impacts on an organization, including operational downtime, reputational damage, and financial loss suffered during the recovery, as well as through compliance fines and legal fees.

The average cost of a data breach is $3.86 million USD. However, breaches that take place in a hybrid cloud environment cost far less on average than those in private cloud environments ($4.24 million USD) or public cloud environments ($5.02 million USD). So, following the best practice of utilizing a hybrid cloud storage model for your backups can help reduce the cost of a breach, should you suffer one. It can also help minimize the breach lifecycle, meaning that you could get back on your feet more quickly than if you were to adopt a solely public or private cloud model.

And considering that 96% of IT managers and decision-makers have experienced at least one instance of operational downtime in the past three years, with many of them experiencing multiple outages, minimizing downtime should be near the top of all IT managers’ business continuity and disaster recovery plans.

Current Cloud Backup Trends

The cloud backup market has been growing steadily in recent years. The global increase in cloud adoption has created a need for agility and innovation within the market, which is now expanding rapidly. In 2017, the market size stood at $1.2 billion USD; today, it stands at $4.5 billion USD, and it’s expected to grow to $13.85 billion by 2028. This gives it a compound annual growth rate of 24.84%.
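For readers who want to check figures like these, a compound annual growth rate (CAGR) is calculated as (end value / start value)^(1 / years) - 1. The Python sketch below applies that formula to the market sizes quoted above. The five-year forecast window is our assumption, since the base year behind “today” isn’t stated. Under that assumption the result comes out at roughly 25%, close to the quoted 24.84%; the small gap likely reflects rounded inputs or a slightly different base year.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in billions of USD, taken from the figures quoted above.
# ASSUMPTION: a five-year window between "today" and 2028.
implied = cagr(start_value=4.5, end_value=13.85, years=5)
print(f"Implied CAGR over 5 years: {implied:.1%}")
```

Changing the `years` argument shows how sensitive the rate is to the choice of base year, which is worth bearing in mind when comparing growth figures from different reports.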

We are producing more data today than ever before. Within that market growth, we can therefore expect cloud backup providers to focus on developing and improving specific features to support the increasing demand for data backup and storage. These include automated backups, file-level recovery, point-in-time restore, and encrypted cloud storage.

How To Protect Your Cloud Data With Backup And Recovery

As more organizations migrate to the cloud or expand their use of cloud services and applications, cloud data will become an increasingly attractive target for cybercriminals. As such, it’s more important than ever before that organizations invest in a secure, robust backup and recovery tool to help them get back on their feet in the event of a data loss incident.

The best backup and recovery tools for cloud data offer the following features:

- Compatibility with the SaaS apps that your organization is storing data in, such as Microsoft 365, Google Workspace, and Salesforce

- Automated daily backups

- Granular search and filtering capabilities

- Individual file and full system restoration options

- Customizable retention periods and storage limits

- Encryption, role-based access, and multi-factor authentication

- Reporting on the status of any backup or restoration activity

Remember, it’s not enough to just buy a backup and recovery tool and leave it to run itself. It’s important that your backup solution is correctly configured and operating as you expect it to. Otherwise, you might not know how to recover your data, or the backup interval may be too great to be effective. You should test your backup solution regularly to ensure that it’s working properly. By taking these steps, you increase your chances of a successful recovery and limit the potential downtime in the aftermath of an accident, disaster, or breach.

How Else Can You Protect Your Cloud Data?

As well as implementing a backup and recovery solution for your cloud data, it’s important that your organization has a business continuity and disaster recovery plan. This plan should be regularly tested to make sure it works and that it is as effective as it can be.

Failing to test your disaster recovery plan may create a false sense of security and leave you vulnerable to attacks. You may have missed a critical area to back up, or you may find that work practices have changed since you first created the plan in ways that render it unusable or ineffective. It could even be that the person responsible for carrying out the post-disaster security audit has left the organization.

On the other hand, organizations that have an incident response team in place and regularly test their recovery plans save an average of $2.66 million USD when they experience a disaster.

Despite this, only 54% of organizations currently have a documented disaster recovery plan in place and, of those that do, half of them only test those plans once a year or less frequently, and 7% never test them at all.

For more guidance on creating an effective incident response plan, read our guide on how to respond to a data breach.
